Large-Scale TV Dataset

Multimodal Named Entity Recognition

(STVD-MNER)

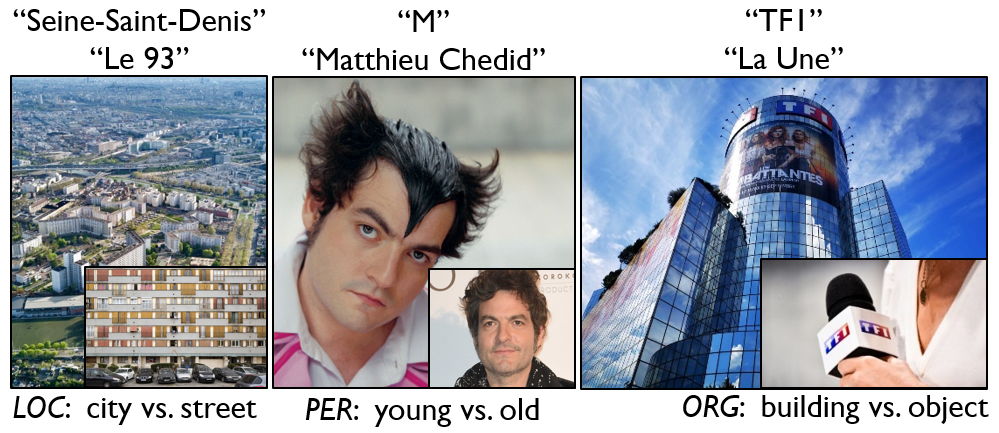

The STVD-MNER dataset addresses the Multimodal Named Entity Recognition (MNER) task in video. MNER in video is an established task in the Computer Vision (CV), Audio Signal Processing (ASP) and Natural Language Processing (NLP) fields. It uses visual, audio and textual information to detect Multimodal Named Entities (MNEs) and to identify their types (e.g. location, person, organization). The next figure gives examples of MNEs with different representations and types. As STVD-MNER is part of the STVD collection, the multimodality is derived from television content appearing in Electronic Programming Guides (EPG) and TV Audio/Video (A/V) streams.

The STVD-MNER dataset is provided in a β version with a Hello World test set. This Hello World test set contains about 820 hours of audio/video data with ≈9K textual Named Entities (NEs).

Its collections are split into two distinct subsets, called top and last. The top subset contains ≈85% of the NEs within ≈⅓ of the dataset. The last subset covers the remaining ≈⅔ and has few (or no) NEs per collection. Here, the MNER task has to be driven from scratch with the transcriptions of the audio streams.

A Full test set, still pending, would cover several thousand hours of A/V data.

The dataset is composed of different parts provided as:

- a root directory for each TV channel,

- for each TV channel, collection subdirectories,

- for each collection subdirectory, a set of JSON files containing the lists and types of NEs,

- for each collection subdirectory, daily subdirectories containing the files of broadcast events (EPG, audio, transcript, video). The EPG data are provided as CSV files summarizing XMLTV content. The audio/video files are encoded with the MPEG-4 format. The transcripts are provided as SRT and TXT files with and without the timestamps.
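As an illustration of how the SRT transcripts might be consumed, the following minimal sketch parses SRT cues (using the usual `HH:MM:SS,mmm --> HH:MM:SS,mmm` timing lines) and returns the cues whose text mentions a given NE. The names `parse_srt` and `find_mentions` are hypothetical, and the case-insensitive substring matching is a simplifying assumption, not the method used to build the dataset.

```python
import re

# Timing line of an SRT cue, e.g. "00:01:02,500 --> 00:01:04,000".
_TIMING = re.compile(
    r"(\d+):(\d{2}):(\d{2})[,.](\d{3})\s*-->\s*(\d+):(\d{2}):(\d{2})[,.](\d{3})"
)


def _seconds(h, m, s, ms):
    """Convert the four timestamp fields to seconds."""
    return int(h) * 3600 + int(m) * 60 + int(s) + int(ms) / 1000.0


def parse_srt(text):
    """Return a list of (start_s, end_s, text) tuples from SRT content."""
    cues = []
    # Cues are separated by one or more blank lines.
    for block in re.split(r"\n\s*\n", text.strip()):
        lines = [ln.strip() for ln in block.splitlines() if ln.strip()]
        for i, line in enumerate(lines):
            match = _TIMING.search(line)
            if match:
                g = match.groups()
                start, end = _seconds(*g[:4]), _seconds(*g[4:])
                # Everything after the timing line is the cue text.
                cues.append((start, end, " ".join(lines[i + 1:])))
                break
    return cues


def find_mentions(cues, entity):
    """Return the cues whose text contains the entity (case-insensitive)."""
    needle = entity.casefold()
    return [cue for cue in cues if needle in cue[2].casefold()]
```

The returned start and end times are relative to the beginning of the audio file, so they can serve as the audio timestamps of a candidate NE segment.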

The following naming convention is applied within the dataset:

- `CX/`
  - `NameCode/`
    - `NEs_list_imdb.json`
    - `NEs_list_stvdkgstr.json`
    - `NEs_list_stvdkgall.json`
    - `day/`
      - `ts_epg.csv`
      - `ts_video.mp4`
      - `ts_audio.mp4`
      - `ts_transcript_wt.srt`
      - `ts_transcript_wt.txt`
      - `ts_transcript_wb.srt`
      - `ts_transcript_wb.txt`
      - `ts_transcript_ws.srt`
      - `ts_transcript_ws.txt`

- `CX` is the directory name of a TV channel, where `CX` takes a label in `{France2, France3, France5, C8, ARTE, W9, TF1}`,

- `NameCode` is the name of a subdirectory containing a collection. It is a normalized ASCII code using `a-z`, `0-9` and `_` as the blank symbol (e.g. `le_grand_betisier`), with a normalized length of `80` characters,

- `NEs_list_X` are the labels used for naming the lists of NEs, with three templates `X = {imdb, stvdkgall, stvdkgstr}`. IMDb and STVD-KG are the two web sources used for extracting the NEs. The lists `{stvdkgall, stvdkgstr}` result from the application of two parameter settings, `ΔKG` and `ΔA/V`, as detailed below,

- `day` and `ts` are two timestamps for naming the subdirectories and files of broadcast events. They follow the templates `YEARMONTHDAY` for `day` (e.g. `20250529`) and `YEARMONTHDAY_HOURS_MINUTES` for `ts` (e.g. `20250529_09_55`), where `ts` is set to the starting time of a broadcast event,

- the subdirectories of broadcast events have the label `day`,

- the EPG files have the label `ts_epg`,

- the audio/video files have the labels `ts_audio` and `ts_video`, respectively,

- the transcript files have the labels `ts_transcript_X`, where `X = {wt, wb, ws}`, produced by the Speech-To-Text (STT) suite Whisper using the 3 transcription models `{tiny, base, small}`. The transcriptions are provided with and without timestamps using the two formats SRT and TXT (resulting in 6 transcription files per audio file).
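The naming convention can be sketched in code as follows. This is a minimal illustration which assumes that file names are formed by substituting the `ts` value literally (e.g. `20250529_09_55_epg.csv`). The helper names `event_files` and `parse_ts` are hypothetical and not part of the dataset tooling.

```python
from datetime import datetime
from pathlib import PurePosixPath

# Channel labels and transcript suffixes listed in the naming convention.
CHANNELS = {"France2", "France3", "France5", "C8", "ARTE", "W9", "TF1"}
MODELS = ("wt", "wb", "ws")  # Whisper tiny, base, small


def event_files(channel, name_code, start):
    """List the relative paths expected for one broadcast event.

    `start` is the starting time of the broadcast event (a datetime).
    """
    if channel not in CHANNELS:
        raise ValueError(f"unknown channel: {channel}")
    day = start.strftime("%Y%m%d")        # e.g. 20250529
    ts = start.strftime("%Y%m%d_%H_%M")   # e.g. 20250529_09_55
    base = PurePosixPath(channel) / name_code / day
    names = [f"{ts}_epg.csv", f"{ts}_video.mp4", f"{ts}_audio.mp4"]
    # Two formats (SRT, TXT) for each of the three models: 6 transcripts.
    names += [f"{ts}_transcript_{m}.{ext}" for m in MODELS for ext in ("srt", "txt")]
    return [str(base / name) for name in names]


def parse_ts(ts):
    """Parse a `ts` label back into the starting time of the event."""
    return datetime.strptime(ts, "%Y%m%d_%H_%M")
```

For one broadcast event this yields 9 paths (1 EPG file, 2 A/V files and 6 transcripts), which matches the per-event file counts given in the dataset statistics.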

For visualization and testing purposes, some samples (NEs and types with their related audio, text and video segments) are given in the next table.

| | NE | Type | Audio | Text | Video |
|---|---|---|---|---|---|
| sample 1 | Gibbs | PER | 1_a | 1_t | 1_v |
| sample 2 | Saint-Denis | LOC | 2_a | 2_t | 2_v |

The dataset is available for non-commercial research purposes.

Before downloading the dataset, get the agreement (in the English or French version) and sign it.

Then, send the scanned version to Mathieu Delalandre.

After verifying your request, we will contact you with the password to unzip the dataset.


The files constituting the dataset are given here with general statistics. For better accessibility, the dataset is provided as separate archive packages of 16 GB each. Our storage service at the UT delivers 3-16 MB/s for downloading (from a low- or high-speed connection, respectively) with concurrent access. Downloading would require about 30 to 45 minutes per package. All the packages are needed to uncompress the dataset.

| Duration (h) | Channels | Collections | NEs files | NEs | EPG files | A/V files | Transcripts | Size (GB) | Packages | Link |
|---|---|---|---|---|---|---|---|---|---|---|
| 819 | 7 | 284 | 439 | 9,256 | 843 ×1 | 843 ×2 | 843 ×6 | 281 | 18 | download |

For clarification, we introduce here some technical and scientific aspects of the STVD-MNER dataset. Further information can be found in the research papers [1,2].

NEs and types: the NEs and their types have been extracted with a robust approach [1] that applies Named Entity Recognition (NER) and Named Entity Linking (NEL) to build up the Knowledge Graph (KG) STVD-KG from EPG (e.g. xmltvfr.fr). STVD-KG is linked to the Web database IMDb. Within STVD-KG and IMDb, the NEs are provided in 3 categories `{PER, LOC, ORG}` (i.e. person, location and organization). The two time intervals `ΔKG` and `ΔA/V` of a collection, for the KG and the A/V data respectively, differ such that `ΔKG ≫ ΔA/V`. Requests within the KG have been made using the two intervals, for an overall and a strict extraction of NEs. The next table gives the statistics of the extracted NEs for the two sources (STVD-KG and IMDb) and the two extraction methods (overall and strict). This results in 3 lists of NEs `{imdb, stvdkgall, stvdkgstr}` dispatched into the 3 categories `{PER, LOC, ORG}`. For clarification, `Union = imdb ∪ stvdkgall` is given (considering `stvdkgstr ⊆ stvdkgall`). Note that some `PER` NEs could appear in duplicate between the two sources `{imdb, stvdkgall}` (which is why the process is referred to as a `Union`).

| | PER | LOC | ORG | Total |
|---|---|---|---|---|
| imdb | 2,929 | 0 | 0 | 2,929 |
| stvdkgstr | 326 | 206 | 7 | 539 |
| stvdkgall | 3,732 | 2,496 | 99 | 6,327 |
| Union | ≤ 6,661 | 2,496 | 99 | ≤ 9,256 |

The NEs appear at different levels in the A/V collections, following a near-exponential distribution. The smallest subset of collections, called `top`, covers the main part of the distribution of NEs. The next table provides its statistics. The `top` subset contains `≈85%` of the NEs within `≈⅓` of the dataset. The largest subset of collections, called `last`, covers the remaining `≈⅔` and has too few (or no) NEs per collection. Here, the MNER task has to be driven from scratch with the transcriptions obtained from the audio streams.

| | Collections | Duration | NEs | Min | Mean | Max |
|---|---|---|---|---|---|---|
| top | 70 (24.6%) | 251.7h (30.7%) | 7,977 (86.2%) | 40 | 114 | 893 |
| last | 214 (75.4%) | 567.4h (69.3%) | 1,279 (13.8%) | 0 | 6.14 | 39 |
| all | 284 (100%) | 819.1h (100%) | 9,256 (100%) | 0 | 32.6 | 893 |
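The NE counts reported in this section can be cross-checked with a few lines of arithmetic. The sketch below is illustrative only: it copies the per-list counts from the statistics, computes the upper bound of the `Union` (duplicates between `imdb` and `stvdkgall` are not removed, hence the `≤`), and recomputes the shares of the `top` subset. The helper names are hypothetical.

```python
# NE counts per list and category, copied from the statistics in this section.
COUNTS = {
    "imdb": {"PER": 2929, "LOC": 0, "ORG": 0},
    "stvdkgstr": {"PER": 326, "LOC": 206, "ORG": 7},
    "stvdkgall": {"PER": 3732, "LOC": 2496, "ORG": 99},
}
CATEGORIES = ("PER", "LOC", "ORG")


def union_upper_bound(counts):
    """Upper bound of |imdb ∪ stvdkgall| per category.

    Duplicates shared by the two sources are not removed, so the true
    size of the union can only be smaller or equal.
    """
    return {c: counts["imdb"][c] + counts["stvdkgall"][c] for c in CATEGORIES}


def share(part, whole):
    """Percentage of `part` in `whole`, rounded to one decimal."""
    return round(100.0 * part / whole, 1)


bound = union_upper_bound(COUNTS)
total_bound = sum(bound.values())          # upper bound on all NEs
top_ne_share = share(7977, total_bound)    # NEs held by the top subset
top_collection_share = share(70, 284)      # collections in the top subset
```

Since `stvdkgstr` is contained in `stvdkgall`, it does not contribute to the bound.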

A/V data: the A/V data have been captured as detailed in [2], with adaptations. For quality improvement, the audio has been encoded at 256 kbps, as supported by the channels of the French DTT. The video data have been encoded at 1.6 Mbps and an SD resolution (720×576, 30 FPS) for the best trade-off between quality and memory cost. Similar to [2], parameters have been applied to map the captured A/V data to collections, where the start $t_0$ and end $t_1$ times of a broadcast event have been corrected into $t_0^-$ and $t_1^+$, respectively. As the A/V capture is asynchronous on the TV Workstation [2], the capture sessions have been bounded to 5×4h a day to minimize the latency between the audio and video. This latency is expressed as $L = d_A - d_V$, with $d_A$ and $d_V$ the audio and video durations, where $d_V$, obtained with hardware encoding, is the real time. The latency is negative, with $L \in \left]-19, -13.9\right[$ seconds (see latency). It can be expressed as a linear function $L(t)$ with $L(t = 4\,\mathrm{h}) = L_{min} = -19$ seconds. The $L(t)$ distribution is then uniform, with an average of $L_{min}/2 = -9.5$ seconds and an error gap $\mp\varepsilon = |L_{min}|/2 = 9.5$ seconds. Considering $t_0$ and $t_1$ the timestamps of an audio segment, the mapping to the video segment is obtained as $t_0 + |L_{min}|/2 - \varepsilon = t_0$ and $t_1 + |L_{min}|/2 + \varepsilon = t_1 + |L_{min}|$.
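The audio-to-video mapping described above can be sketched as a small function. This is a minimal illustration, assuming timestamps in seconds relative to the start of the files. The name `video_window` is hypothetical, and the constant is the `Lmin` value of -19 seconds stated in the text.

```python
L_MIN = -19.0  # seconds, latency reached at the end of a 4 h capture session


def video_window(t0, t1, l_min=L_MIN):
    """Map an audio segment [t0, t1] to the video window that contains it.

    The window is shifted by the mean latency magnitude |Lmin|/2 and
    widened by the error gap eps = |Lmin|/2 on both sides, which gives
    [t0, t1 + |Lmin|].
    """
    if t1 < t0:
        raise ValueError("t1 must not precede t0")
    half = abs(l_min) / 2.0  # magnitude of the average latency
    eps = abs(l_min) / 2.0   # error gap around the average
    return (t0 + half - eps, t1 + half + eps)
```

For example, an audio segment from 100 s to 110 s maps to the video window from 100 s to 129 s. The widened window trades precision for the guarantee that the segment is covered whatever the actual latency within the session.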

STT: the transcript files have been generated using the Whisper STT suite with 3 models `{tiny, base, small}`. They are provided as SRT and TXT files, with and without the timestamps. The STT requires a huge computation time. To process the $n = 843$ audio files of the dataset (with a total duration of 819h), parallel processing has been deployed. This uses a high-performance computer (DELL 5820 computer, CPU Intel Xeon W-2295, $k_{max} = 36$ threads, 256 GB of RAM, 36 TB of disk capacity) and a multithreading implementation (a threading parameter $k$ is fixed for each model `{tiny, base, small}`, where each thread in $[1, k]$ processes $\approx n/k$ files in batch). The optimum $k$ parameters have been fixed for every model from the interpolation of Throughput (Th) curves ($x = k$, $y = Th$), preventing CPU/memory thrashing. The overall Th is derived from the multithreading processing and computed from $D_i$, the duration of the audio data to be processed by thread $i$, and $RT_i$, its response (or execution) time. For time synchronization, it is important to have an equal duration of audio data to process per thread, such that $D_i \approx 819\,\mathrm{h}/k$. This is known as the problem of partitioning into $k$ equal-sum subsets, which is NP-hard with a complexity $O(k^n)$ (e.g. with $k = 36$ and $n = 850$ we have $O(k^n) > 10^{10^3}$ solutions). It can be solved with different algorithms such as Greedy or Backtracking, resulting in an RT variation of ≈5% between the $k$ threads. Based on these mechanisms, the optimum Th obtained for the models `{tiny, base, small}` are given here, together with the overall times to process the dataset.

| | tiny | base | small |
|---|---|---|---|
| Th | 42.5 | 30.9 | 11.9 |
| Dmax | 19.3h | 26.5h | 68.8h |
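To make the balancing step concrete, here is a minimal sketch of one greedy strategy (longest file first, assigned to the least-loaded thread). It is one possible instance of the Greedy family mentioned above, not necessarily the implementation used for the dataset. The sketch also assumes that Th can be read as hours of audio processed per hour of wall-clock time, a reading consistent with the reported values (819 h / 42.5 ≈ 19.3 h). All function names are hypothetical.

```python
import heapq
import math


def greedy_partition(durations, k):
    """Split file durations into k bins with near-equal total duration.

    Files are taken longest first and each one goes to the thread that
    currently has the smallest load. Returns (bins, loads), where bins
    holds file indices per thread and loads the total duration per thread.
    """
    if k < 1:
        raise ValueError("k must be at least 1")
    heap = [(0.0, i) for i in range(k)]  # (current load, thread index)
    heapq.heapify(heap)
    bins = [[] for _ in range(k)]
    order = sorted(range(len(durations)), key=lambda j: durations[j], reverse=True)
    for idx in order:
        load, i = heapq.heappop(heap)
        bins[i].append(idx)
        heapq.heappush(heap, (load + durations[idx], i))
    loads = [sum(durations[j] for j in b) for b in bins]
    return bins, loads


def processing_time(total_hours, throughput):
    """Overall processing time in hours, assuming Th = audio hours per hour."""
    return total_hours / throughput


def search_space_log10(k, n):
    """log10 of k**n, the number of ways to assign n files to k threads."""
    return n * math.log10(k)
```

The greedy pass runs in O(n log n) time for the sort plus O(n log k) for the heap operations, which is why it is a practical alternative to an exhaustive search over the `k**n` assignments.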

[1] H.G. Vu, N. Friburger, A. Soulet and M. Delalandre. stvd-kg: A Knowledge Graph for French Electronical Program Guides. International Conference on Web Information Systems Engineering (WISE), 2025.

[2] F. Rayar, M. Delalandre and V.H. Le. A large-scale TV video and metadata database for French political content analysis and fact-checking. Conference on Content-Based Multimedia Indexing (CBMI), pp. 181-185, 2022.