Large-Scale TV Dataset for Parallel Machine Scheduling (STVD-PMS)

The STVD-PMS dataset is related to the problem of Parallel Machine Scheduling (PMS) for the capture of live videos within the TV Workstation [1-4]. This PMS problem can be characterized as follows.

- There is a limited number $m$ of machines for video capture in the TV Workstation, e.g. $m = 8$ [1,2] or $m = 4$ [3], with $m \ll ch$, where $ch$ is the number of channels (e.g. 30).

- The video capture cannot be delayed: the jobs $1, \dots, j, \dots, n$ have static starting/ending times $s_j, e_j$.

The TV is broadcasted with a latency, this latency can be characterized:

-

offline as a Gaussian function with a video detection method [1,2].

The function parameters can be used to obtain shifted starting / ending times

ŝk,êk for a job such as,

ŝj=sj-|Lmin| êj=ej+Lmax with Lmin=μ-kσ, Lmax=μ+kσ (typical values for k are 2 to 3). That is, two consecutive jobs i, j within a same channel can be captured at [ŝi; êj]. -

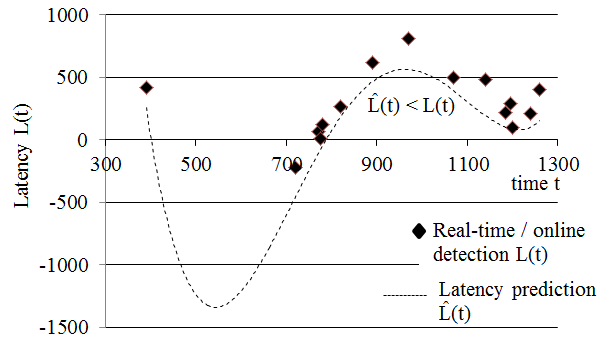

online as a latency function L(t) designed with methods of real-time detection and time series forecasting.

A real-time detection [3] can be used to get samples of latency L(t) for the TV channels.

These samples L(t) serve to get predictions L̂(t) and drive the PMS

(L̂(t) < L(t) ∀ t for a safe capture).

A L̂0(t) parameter has to be fixed to initiate prediction,

a baseline approach is to set L̂0(t) = Lmin.


- The PMS can be handled with multiple criteria, e.g. the aging of TV programs, their categories, the channel ids, etc.
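The offline shift of the starting/ending times can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the authors' code: the `Job` type and the function names are hypothetical, times are plain seconds, and $k$ defaults to 2. The example values (20:45:00 to 20:50:00, $\mu = 117$ s, $\sigma = 245$ s) are taken from the sample CSV row of the dataset.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass
class Job:
    """Hypothetical job record; times are assumed to be in seconds."""
    channel: int
    start: float  # s_j
    stop: float   # e_j
    mean: float   # mu, offline latency mean (s)
    std: float    # sigma, offline latency standard deviation (s)


def latency_bounds(mean: float, std: float, k: float = 2.0) -> Tuple[float, float]:
    """Return (L_min, L_max) = (mu - k*sigma, mu + k*sigma)."""
    return mean - k * std, mean + k * std


def shifted_interval(job: Job, k: float = 2.0) -> Tuple[float, float]:
    """Return (s_hat, e_hat) with s_hat = s - |L_min| and e_hat = e + L_max."""
    l_min, l_max = latency_bounds(job.mean, job.std, k)
    return job.start - abs(l_min), job.stop + l_max


def merged_interval(job_i: Job, job_j: Job, k: float = 2.0) -> Tuple[float, float]:
    """Capture window [s_hat_i, e_hat_j] for consecutive jobs i, j of one channel."""
    if job_i.channel != job_j.channel or job_i.stop > job_j.start:
        raise ValueError("jobs must be consecutive on the same channel")
    return shifted_interval(job_i, k)[0], shifted_interval(job_j, k)[1]


# Sample row: 20:45:00 -> 20:50:00 (seconds since midnight), mu = 117 s, sigma = 245 s.
job = Job(channel=1, start=74700, stop=75000, mean=117, std=245)
print(latency_bounds(117, 245))  # (-373.0, 607.0)
print(shifted_interval(job))     # (74327.0, 75607.0)
```

With these values the 5-minute program is captured over a window of 1,280 s (about 21 minutes), which shows how strongly a large $\sigma$ inflates the capture load.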

The dataset provides two collections, with offline and online latency respectively. The process used to build these collections is detailed below.

- Both collections were obtained with a handmade crawler [1, 2] that collected the metadata of French TV programs over the period December 2020 to May 2021.

- TV programs are repeated (e.g. daily and weekly programs). A hashing method [1, 2] was applied to group them, ensuring good grouping precision.

- The TV metadata are not consistent. Timestamping errors can appear (e.g. $s_j > e_j$ or $e_j - s_j \simeq 0$). Programs can also be scheduled at the same time within a channel (e.g. $e_i > s_j$ for consecutive programs $i, j$). A post-processing step was applied to guarantee consistent jobs for capture (no negative or null durations, and a single program broadcast per channel and time interval).
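The exact post-processing rules are not detailed on this page, so the Python sketch below only illustrates one plausible policy, with hypothetical rules: drop jobs whose duration is negative or null, then, within a channel, clip a program's stop to the start of the next program when they overlap (dropping it if its duration becomes null).

```python
from collections import defaultdict


def clean_jobs(jobs):
    """Return consistent (channel, start, stop) jobs, sorted by channel then start.

    Hypothetical rules (not the documented STVD-PMS post-processing):
      1. drop jobs with a negative or null duration (stop <= start);
      2. within a channel, when a program starts before the previous one ends,
         clip the previous program's stop to that start, dropping it if its
         duration becomes null.
    """
    by_channel = defaultdict(list)
    for channel, start, stop in jobs:
        if stop > start:
            by_channel[channel].append([start, stop])

    cleaned = []
    for channel in sorted(by_channel):
        kept = []
        for start, stop in sorted(by_channel[channel]):
            if kept and kept[-1][1] > start:   # overlap: e_i > s_j
                kept[-1][1] = start            # clip the previous program
                if kept[-1][1] <= kept[-1][0]:
                    kept.pop()                 # clipped to a null duration
            kept.append([start, stop])
        cleaned.extend((channel, start, stop) for start, stop in kept)
    return cleaned


print(clean_jobs([(1, 0, 100), (1, 50, 150), (1, 200, 200), (2, 10, 5), (1, 150, 180)]))
# [(1, 0, 50), (1, 50, 150), (1, 150, 180)]
```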

The files constituting the dataset are given below, protected with a password.

The dataset is available for non-commercial research purposes.

Before downloading the dataset, get the agreement (English or French version) and sign it.

Then, send the scanned version to Mathieu Delalandre.

After verifying your request, we will contact you with the password to unzip the dataset.


The two collections composing the dataset are listed in the table below. They are provided as CSV files with semicolon-separated fields:

Channel; Hashcode; Start; Stop; Latency(s); Mean(s); Std(s)

where

- Channel is an integer corresponding to the channel id,
- Hashcode is a long (64 bits) given in hexadecimal coding,
- Start; Stop are timestamps with the format YEAR | MONTH | DAY | HOURS | MINUTES | SECONDS (i.e. YYYYMMDDhhmmss),
- Latency(s) is an integer corresponding to the online latency $L(t)$ (in seconds), obtained with a real-time method for video detection [3]. It has the value NULL for the predictions and for the offline collection,
- Mean(s); Std(s) are integers corresponding to the offline latency parameters $\mu$ and $\sigma$ (in seconds), obtained with a video detection method [1,2].

For example:

1; 00808c3868b1e82f; 20210130204500; 20210130205000; 273; 117; 245
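A line in this format can be read with the Python standard library as sketched below. The `Record` type and `parse_line` function are hypothetical names; the sketch assumes one record per line with no header, and treats NULL as a missing value in any integer field (the format description only documents NULL for Latency(s)).

```python
from datetime import datetime
from typing import NamedTuple, Optional

TIMESTAMP_FORMAT = "%Y%m%d%H%M%S"  # YEAR MONTH DAY HOURS MINUTES SECONDS


class Record(NamedTuple):
    """Hypothetical record type for one CSV line."""
    channel: int
    hashcode: int            # 64-bit program hash, parsed from hexadecimal
    start: datetime
    stop: datetime
    latency: Optional[int]   # online latency L(t) in seconds, None for NULL
    mean: Optional[int]      # offline mu in seconds (NULL handling assumed)
    std: Optional[int]       # offline sigma in seconds (NULL handling assumed)


def _int_or_null(field: str) -> Optional[int]:
    return None if field.upper() == "NULL" else int(field)


def parse_line(line: str) -> Record:
    """Parse 'Channel; Hashcode; Start; Stop; Latency(s); Mean(s); Std(s)'."""
    fields = [f.strip() for f in line.split(";")]
    if len(fields) != 7:
        raise ValueError(f"expected 7 fields, got {len(fields)}")
    channel, hashcode, start, stop, latency, mean, std = fields
    return Record(
        channel=int(channel),
        hashcode=int(hashcode, 16),
        start=datetime.strptime(start, TIMESTAMP_FORMAT),
        stop=datetime.strptime(stop, TIMESTAMP_FORMAT),
        latency=_int_or_null(latency),
        mean=_int_or_null(mean),
        std=_int_or_null(std),
    )


rec = parse_line("1; 00808c3868b1e82f; 20210130204500; 20210130205000; 273; 117; 245")
print(rec.start, rec.stop, rec.latency)  # 2021-01-30 20:45:00 2021-01-30 20:50:00 273
```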

For testing purposes, samples of the two collections are provided here: sample_1, sample_2.

| Collection | Latency | Duration | Period | Channels | Jobs | Hashcodes | m (machines) | Link |
|---|---|---|---|---|---|---|---|---|
| Collection 1 | Offline | 170 days | 12/2020 - 05/2021 | 26 | 99k | 5,615 | 8 | download |
| Collection 2 | Online | 30 days | 02/2021 | 8 | 6k | 223 | 2; 4 | download |
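The m column gives the number of capture machines. A quick feasibility check, sketched below with hypothetical function names, is to compare m with the largest number of capture intervals active at the same instant: for intervals, this peak equals the minimum number of machines needed to capture every job (interval graphs can be coloured with as many colours as their largest clique). The intervals are assumed to be half-open shifted windows $[\hat{s}_j, \hat{e}_j)$.

```python
def max_concurrent(intervals):
    """Largest number of half-open [start, stop) intervals active at once."""
    events = []
    for start, stop in intervals:
        events.append((start, +1))
        events.append((stop, -1))
    # Sorting puts (t, -1) before (t, +1): a capture ending at t frees its
    # machine for a capture starting at t.
    events.sort()
    active = peak = 0
    for _, delta in events:
        active += delta
        peak = max(peak, active)
    return peak


def fits_on_machines(intervals, m):
    """True if all intervals can be captured with m machines (no job dropped)."""
    return max_concurrent(intervals) <= m


captures = [(0, 10), (5, 15), (10, 20)]
print(max_concurrent(captures), fits_on_machines(captures, 1))  # 2 False
```

When the peak exceeds m, some jobs have to be dropped, which is where a weighted selection algorithm is needed.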

To get started, the STVD-PMS dataset is provided with a "hello world" processing algorithm. The parallel scheduling of jobs within the dataset can be characterized as a Weighted Job Interval Selection Problem (WJISP). This can be addressed with the parameterized algorithm GREEDYα [ErlebachT2001]. A C++ implementation of this algorithm is provided here: PRD_GreedyAlpha (with functions to convert XMLTV files into CSV). The output of the algorithm can be used for characterization, but also for online control of the video capture. This requires a video platform (e.g. [4]) with a control solution. The TvStation_Remote project provides a library for the infrared control of video devices using PhidgetIR sensors.
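A minimal sketch of the GREEDYα idea, assuming its usual formulation for WJISP: intervals are processed by non-decreasing right endpoint, and a new interval replaces the selected intervals it conflicts with (overlapping in time or belonging to the same job) only if its weight exceeds α times their total weight. It covers a single machine only, it is not the PRD_GreedyAlpha code, and the `Interval` type, job identifiers and weights are hypothetical.

```python
from typing import List, NamedTuple


class Interval(NamedTuple):
    job: str        # hypothetical job identifier (e.g. a program hashcode)
    start: float    # half-open capture interval [start, stop)
    stop: float
    weight: float   # hypothetical weight (e.g. from program category or aging)


def conflicts(a: Interval, b: Interval) -> bool:
    """Intervals conflict if they overlap in time or belong to the same job."""
    return a.job == b.job or (a.start < b.stop and b.start < a.stop)


def greedy_alpha(intervals: List[Interval], alpha: float = 1.0) -> List[Interval]:
    """Single-machine GREEDY-alpha style selection (sketch, not PRD_GreedyAlpha)."""
    selected: List[Interval] = []
    for cand in sorted(intervals, key=lambda iv: iv.stop):
        clash_weight = sum(iv.weight for iv in selected if conflicts(iv, cand))
        if cand.weight > alpha * clash_weight:
            # Replace every conflicting selected interval by the candidate.
            selected = [iv for iv in selected if not conflicts(iv, cand)]
            selected.append(cand)
    return selected


jobs = [Interval("a", 0, 10, 3), Interval("b", 5, 15, 5), Interval("c", 12, 20, 2)]
print([iv.job for iv in greedy_alpha(jobs)])  # ['b']
```

Larger values of α make replacements harder, so the selection changes less often as new intervals arrive.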

Please cite the following papers, in English [1] or French [2], if you use this dataset.

- [1] V.H. Le, M. Delalandre and D. Conte. A large-scale TV dataset for partial video copy detection. International Conference on Image Analysis and Processing (ICIAP), Lecture Notes in Computer Science (LNCS), vol 13233, pp. 388-399, 2022.

- [2] V.H. Le, M. Delalandre and D. Conte. Une large base de données pour la détection de segments de vidéos TV. Journées Francophones des Jeunes Chercheurs en Vision par Ordinateur (ORASIS), 2021.

- [3] V.H. Le, M. Delalandre and D. Conte. Real-time detection of partial video copy on TV workstation. Conference on Content-Based Multimedia Indexing (CBMI), pp. 1-4, 2021.

- [4] M. Delalandre. The TV Workstation project: a research scope. FICT seminar, Thanh Hóa, Vietnam, 25th of October 2022.